David Espindola - Editor and Curator

Back to Human Essentials in an AI-Driven World

Dear Nexus Reader,

AGI is no longer a distant thought experiment—it’s a deadline with a debate attached. The real question isn’t simply when we’ll reach it, but whether our institutions, our culture, and our inner lives are prepared for what follows.

This month’s issue captures the tension clearly. Shane Legg—co-founder and Chief AGI Scientist at Google DeepMind—suggests a 50/50 chance of “minimal AGI” by 2028. In sharp contrast, a Futurism.com piece argues that large language models will never be intelligent. You can pick a side and argue all day, but the more urgent question sits underneath both claims: to what extent do we trust the machines we’re rapidly weaving into daily life?

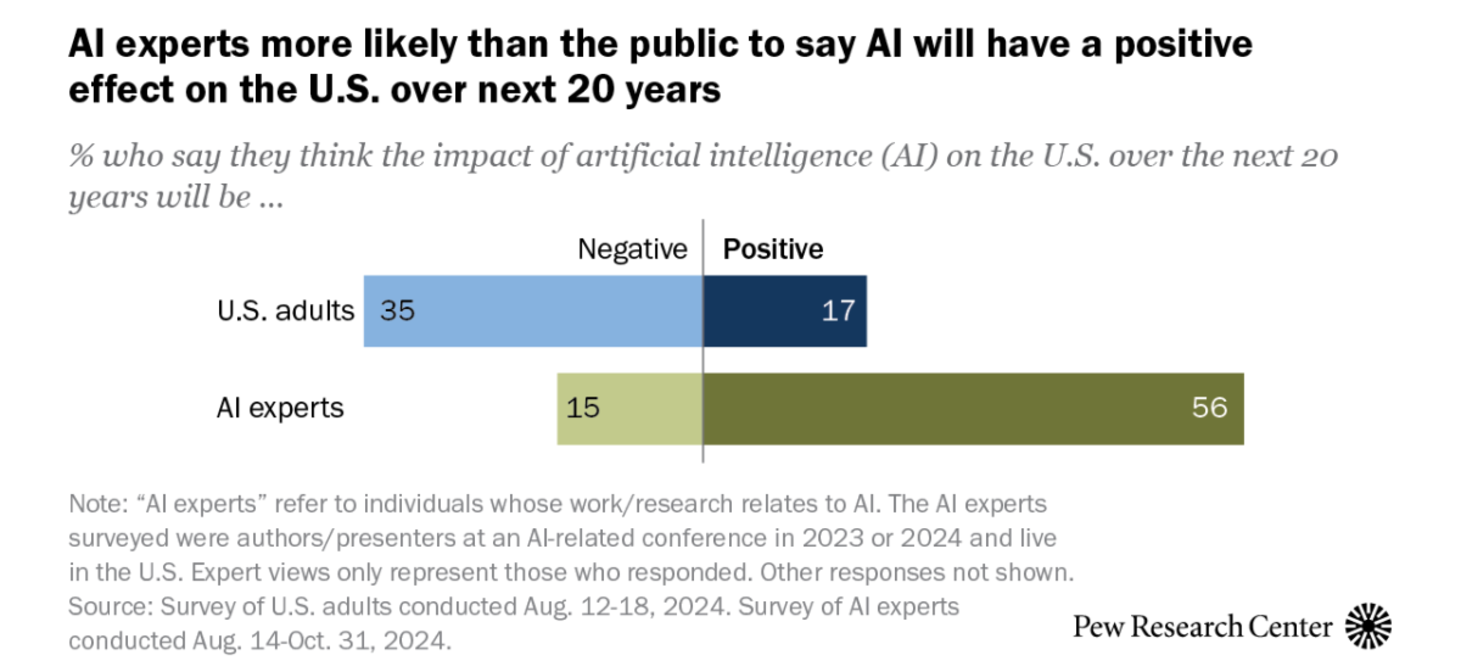

The trust gap is already visible. The 2025 Edelman Trust Barometer (Flash Poll) points to shaky confidence in AI, and Pew Research Center findings suggest a widening perception divide—AI experts tend to be more optimistic than the public. That mismatch matters, because it shapes adoption, regulation, resistance, and ultimately social cohesion.

Here’s the shift I’m watching most closely: as AI accelerates, the discourse is drifting back toward the human essentials. Satya Nadella puts it bluntly: IQ without EQ is a waste. Author Yaela Raber reminds us there is no AI without human intelligence (HI). And across the research and commentary, a consistent theme emerges: AI can deliver speed, personalization, and accessibility, but it still struggles with nuance, cultural awareness, moral context, and empathy.

We close—intentionally—with Emotional Intelligence. An Entrepreneur.com article makes the case that EQ may become the most potent force in the future of business and human performance. When empathy becomes a KPI and sincerity becomes a strategy, we don’t just improve outcomes—we strengthen the social fabric that makes progress worth having.

As we embark on a new year of exploring the frontiers of intelligence, I leave you with a simple invitation: as AI grows more capable, let’s become more capable humans—more discerning in what we delegate, more intentional in what we preserve, and more courageous in what we choose to become.

Warmly,

David Espindola

Editor, Nexus: Exploring the Frontiers of Intelligence

Nexus Deep Dive - Episode 19

If you prefer to consume the content of this publication in audio, head over to Nexus Deep Dive and enjoy the fun and engaging podcast-style discussion.

Nexus Deep Dive is an AI-generated conversation in podcast style where the hosts talk about the content of each issue of Nexus.

Artificial Intelligence

The Arrival of AGI: DeepMind's Vision

This is a Google DeepMind podcast episode featuring co-founder and chief AGI scientist, Shane Legg, and host Professor Hannah Fry. The discussion centers on the concept of Artificial General Intelligence (AGI), with Legg defining it on a continuum from "minimal AGI" (human-level cognitive capability) to "full AGI" and eventually Artificial Super Intelligence (ASI). Legg makes a key prediction of a 50/50 chance of minimal AGI by 2028 and discusses the current capabilities of AI, noting its uneven performance: some areas already exceed human ability, while others, like continual learning and advanced reasoning, still lag. Crucially, the conversation explores the significant societal and economic transformation that AGI will cause, emphasizing the need for proactive work on ethical reasoning and safety measures to ensure a beneficial future.

Large Language Models Will Never Be Intelligent, Expert Says

This article contests the notion that current Large Language Models (LLMs) will ever achieve true intelligence or Artificial General Intelligence (AGI), despite optimistic industry claims. The author quotes Benjamin Riley, who argues that LLMs are merely tools that emulate communicative language function and cannot replicate the separate cognitive process of human thought required for AGI. This position is supported by neuroscience research indicating that thinking is independent of language, and by functional studies showing that reasoning ability is retained even when linguistic capacity is lost. Furthermore, a separate analysis confirms that due to their probabilistic design, LLMs possess a "hard ceiling" on their creative capacity and will be unable to generate truly novel outputs, limiting them to producing average or formulaic results. This skepticism about language-only models is shared by influential figures in the field, such as Yann LeCun, who advocates for the development of non-language-based "world models" instead.

Trusting the Machine: 2025 AI Adoption Insights

The 2025 Edelman Trust Barometer Flash Poll reveals that the adoption of new technologies faces serious challenges, primarily because trust in artificial intelligence lags significantly behind general faith in the technology sector. Survey results indicate deep geographic and socioeconomic divides: developed markets are more resistant to AI's growing use than developing nations, and lower-income groups fear being left behind. The data makes clear that enthusiasm is not innate but is strongly driven by knowledge and personal experience, showing that when individuals, especially employees, see AI improve their productivity and problem-solving, their trust increases dramatically. Therefore, to power future growth, organizations must foster acceptance by providing high-quality training and demonstrating transparency about job impact, especially since people trust peers and scientists far more than CEOs or government leaders on this subject.

How the U.S. Public and AI Experts View Artificial Intelligence

The Pew Research Center report examines and contrasts the opinions of the U.S. public and a surveyed group of AI experts concerning the development and integration of artificial intelligence into society. A key distinction found is that experts are far more positive and enthusiastic about the expected impact of AI on jobs, the economy, and personal life over the next two decades than the general American public. Despite this enthusiasm gap, both populations share significant common ground, particularly in their worry that U.S. government regulation of AI will be insufficient and their desire for more personal control over the technology. Furthermore, both groups express high levels of concern over issues like inaccurate information and bias, and they recognize that the perspectives of men and White adults are better represented in AI design than those of other demographic groups. This analysis synthesizes data from large surveys of American adults and domestic AI professionals conducted in late 2024.

Human Intelligence

Microsoft CEO says empathy is a workplace superpower in the age of AI: 'IQ without EQ, it's just a waste'

Microsoft CEO Satya Nadella issued a stark warning to business leaders this week: intelligence alone won't be enough to succeed as artificial intelligence transforms the workplace. Speaking on the "MD Meets" podcast with Axel Springer CEO Mathias Döpfner, Nadella argued that emotional intelligence has become essential for navigating the rapidly evolving AI landscape.

"IQ has a place, but it's not the only thing that's needed in the world," Nadella said in the episode that aired November 29. "I've always felt, at least leaders, if you just have IQ without EQ, it's just a waste of IQ."

No AI without HI: Why human intelligence is the real competitive edge in the age of AI

This article by Yaela Raber argues that Human Intelligence (HI), not the technology itself, is the critical factor for organizational success with artificial intelligence. The author posits that since access to AI tools is becoming universal, the true differentiator lies in human capacities such as judgment, ethical clarity, innovation, and risk mitigation, which AI cannot replicate. The text outlines the business case for HI, detailing six domains of HI across inner and outer layers (including the mind, body, emotions, and intuition), and explains that organizations must invest in these human capacities to ensure intelligent speed and direction as AI accelerates. Ultimately, the article advocates for a Human Intelligence Revolution where leaders consciously develop internal resources to direct AI effectively, rather than relying solely on technological advancement.

The Human Edge: Where Emotional Intelligence Meets AI in the Future of Learning | The AI Journal

This article argues that Artificial Intelligence (AI) must serve to amplify, not replace, human learning in the workplace. The author emphasizes that while AI offers speed, personalization, and accessibility through tools like adaptive chatbots, it lacks essential human qualities such as nuance, cultural awareness, and empathy, which are critical for deep learning and communication. The article highlights that skills like emotional intelligence, critical thinking, and intercultural competence remain in high demand and cannot be replaced by machines, necessitating a shift toward human-led learning designs that focus on durable skills. Ultimately, the future of workplace development is envisioned as a human-AI symbiosis where technology supports continuous, personalized growth, but human mentors and connection transform knowledge into lasting capability and resilience. The author concludes that investing in human transformation and communication will be key for competitive organizations in the AI era.

Emotional Intelligence Will Rule the Future of Business — Not AI

Emotional intelligence has evolved from a "soft skill" to the most powerful competitive advantage in business—an invisible but irreplaceable force that drives consumer loyalty and long-term success.

Redefinition of Success

Old paradigm: Scale fastest, optimize everything

New paradigm: Scale while deepening emotional density and cultural memory

The brands that endure won't be those that shouted loudest, but those that listened deepest.

Bottom Line

In an AI-driven world, emotional intelligence remains exclusively human. Treating empathy as a KPI and sincerity as strategy creates not just profit, but permanence—because "leadership, like perfume, is invisible yet unforgettable."